Python

Scorecard helps you evaluate the performance of your LLM app to ship faster with more confidence! In this quickstart we will:

- Setup Scorecard

- Create a Testset

- Create an example LLM app with OpenAI

- Define the evaluation setup

- Score the LLM app with the Testset

- Review evaluation results in the Scorecard UI

Steps

Setup

First let’s create a Scorecard account and find the SCORECARD_API_KEY in the settings. Since this example creates a simple LLM application using OpenAI, get an OpenAI API key. Set both API keys as environment variables as shown below. Additionally, install the Scorecard and OpenAI Python libraries:

Create a Testset and Add Testcases

A Testset is a collection of Testcases used to evaluate the performance of an LLM application across a variety of inputs and scenarios. A Testcase is a single input to an LLM that is used for scoring. Now let’s create a Testset and add some Testcases using the Scorecard Python SDK:

Create a Simple LLM App

Next let’s create a simple LLM application which we will be evaluating using Scorecard. This LLM application is represented with the following function that sends a request with a user-defined input to the OpenAI API Here we’ve created a system that will be a helpful assistant in response to our input user query.

Create Metrics

Now that we have a system that answers questions from the MMLU dataset, let’s build a metric to understand how relevent the system responses are to our user query. Let’s go to the Scoring Lab and select “New Metric”

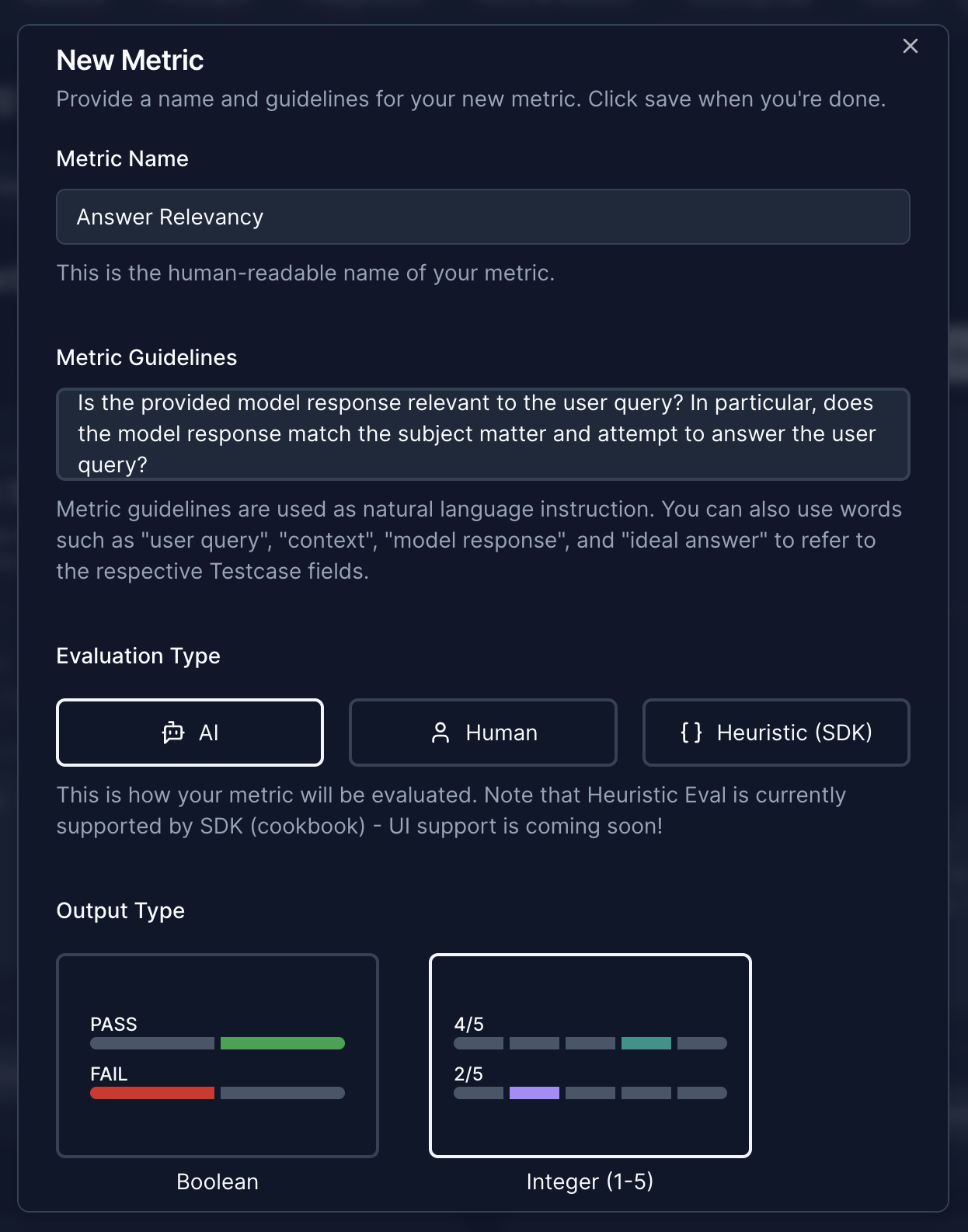

From here let’s create a metric for answer relevency:

Defining the Metric Answer Relevancy

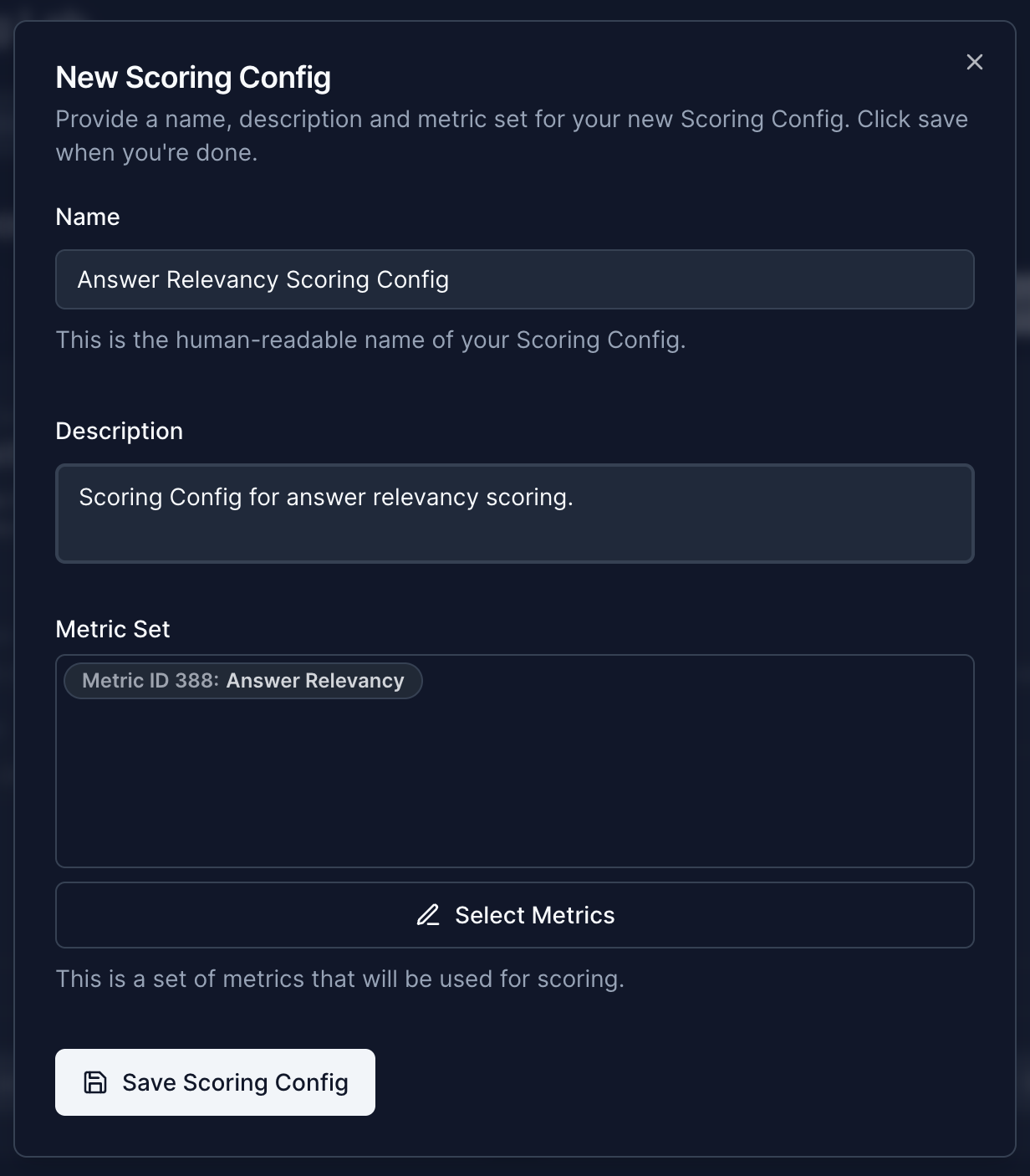

Defining the Metric Answer RelevancyYou can evaluate your LLM systems with one or multiple metrics. A good practice is to routinely test the LLM system with the same metrics for a specific use case. For this, Scorecard offers to define Scoring Configs, collection of metrics to be used to consistently evaluate LLM use cases with the same metrics. For the quick start, we will create a Scoring Config just including the previously created Answer Relevancy metric. Let’s head over to the “Scoring Config” tab in the Scoring Lab and create this Scoring Config. Let’s grab that Scoring Config ID for later:

Create a Scoring Config Including the Answer Relevancy Metric

Create a Scoring Config Including the Answer Relevancy MetricCreate Test System

Now let’s use our mock system and run our Testset against it replacing the Testset id below with the Testset from before and the Scoring Config ID above: