Boost Your LLM Evaluation Game With Scorecard’s Best-in-Class Metrics

When starting out with an LLM application, you begin with a “vibe check”: you run a few prompts manually to see if the responses make sense. Then you take it a step further by manually testing a set of your favorite prompts after each LLM iteration. However, you might wonder how to quantify the quality of LLM responses and test it consistently. This is when you need metrics. A metric is a standard of measurement; in LLM evaluation, a metric serves as a benchmark for assessing the quality of LLM responses.

Assess Your LLM Quality With Scorecard’s Metrics

Scorecard helps you define metrics for LLM evaluation in several ways. Let’s look at some of the advantages it offers!

Build on Scorecard’s LLM Evaluation Expertise and Use Core Metrics

The Scorecard team consists of experts in LLM evaluation, with extensive experience in assessing and deploying large-scale AI applications at some of the world’s leading companies. Our Scorecard Core Metrics, validated by our team, represent industry-standard benchmarks for LLM performance. You can explore these Core Metrics in the Scoring Lab, under “Metric Templates,” to select the ones best suited for your LLM evaluation needs.

Overview of Scorecard Core Metrics in Metric Templates

If you would like to use a specific Core Metric, simply make a copy of it and, if needed, edit its details before importing it into your metrics section.

Metric Template to Copy

Second-Party Metrics from MLflow

Scorecard supports running MLflow metrics directly within the Scorecard platform and adds complementary capabilities such as aggregation, A/B comparison, iteration, and more. Several metrics from MLflow’s genai package are available in the Scorecard metrics library, including:

- Relevance
  - Description: Evaluates the appropriateness, significance, and applicability of an LLM’s output in relation to the input and context.
  - Purpose: Assesses how well the model’s response aligns with the expected context or intent of the input.

- Answer Relevance
  - Description: Assesses the relevance of a response provided by an LLM, considering the accuracy and applicability of the response to the given query.
  - Purpose: Focuses specifically on the relevance of responses in the context of queries posed to the LLM.

- Faithfulness
  - Description: Measures the factual consistency of an LLM’s output with the provided context.
  - Purpose: Ensures that the model’s responses are not only relevant but also accurate and trustworthy, based on the information available in the context.

- Answer Correctness
  - Description: Evaluates the accuracy of the provided output based on a given “ground truth” or expected response.
  - Purpose: Crucial for understanding how well an LLM can generate correct and reliable responses to specific queries.

- Answer Similarity
  - Description: Assesses the semantic similarity between the model’s output and the provided targets or “ground truth”.
  - Purpose: Used to evaluate how closely the generated response matches the expected response in terms of meaning and content.

You can view the full prompts for these metrics in the Scorecard Scoring Lab or in the MLflow GitHub repository.
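These metrics are LLM-as-a-judge metrics. A grading prompt combines the metric definition with the test case (input, output, and context or ground truth where needed), and a judge model returns a score along with a justification. MLflow's genai metrics, for example, score on a 1-5 scale. The sketch below is a minimal, library-free illustration of that pattern, not MLflow's or Scorecard's implementation. The prompt template, the `score:`/`justification:` reply format, and the stub `fake_judge` (which stands in for a real model call) are all hypothetical.

```python
import re
from typing import Callable, Tuple

# Hypothetical grading prompt; the real MLflow/Scorecard prompts differ.
PROMPT_TEMPLATE = """You are grading an LLM response.
Metric definition: {definition}
Input: {question}
Output: {answer}
Ground truth: {ground_truth}
Reply in the form:
score: <integer from 1 to 5>
justification: <one sentence>"""


def build_prompt(definition: str, question: str, answer: str, ground_truth: str) -> str:
    """Fill the hypothetical template with one test case."""
    return PROMPT_TEMPLATE.format(
        definition=definition, question=question, answer=answer, ground_truth=ground_truth
    )


def parse_judgement(reply: str) -> Tuple[int, str]:
    """Extract the 1-5 score and the justification from the judge's reply."""
    score_match = re.search(r"score:\s*([1-5])\b", reply, re.IGNORECASE)
    if score_match is None:
        raise ValueError(f"No 1-5 score found in judge reply: {reply!r}")
    just_match = re.search(r"justification:\s*(.+)", reply, re.IGNORECASE)
    justification = just_match.group(1).strip() if just_match else ""
    return int(score_match.group(1)), justification


def judge_metric(judge: Callable[[str], str], definition: str, question: str,
                 answer: str, ground_truth: str) -> Tuple[int, str]:
    """Send the grading prompt to a judge model and parse its verdict."""
    reply = judge(build_prompt(definition, question, answer, ground_truth))
    return parse_judgement(reply)


def fake_judge(prompt: str) -> str:
    # Hypothetical stub standing in for a real LLM API call.
    return "score: 4\njustification: Mostly correct but omits one detail."


if __name__ == "__main__":
    score, why = judge_metric(
        fake_judge,
        definition="Answer correctness: how accurate the output is versus the ground truth.",
        question="What is the capital of France?",
        answer="Paris is the capital.",
        ground_truth="Paris",
    )
    print(score, why)  # 4 Mostly correct but omits one detail.
```

Strict parsing is a deliberate choice here: if the judge replies in an unexpected format, raising an error is safer than silently recording a default score.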

Second-Party Metrics from RAGAS

You can also use metrics from the RAGAS framework, which specializes in evaluating Retrieval-Augmented Generation (RAG) pipelines. As with MLflow metrics, Scorecard adds complementary capabilities such as aggregation, A/B comparison, iteration, and more.

Component-Wise Evaluation

To assess the performance of individual components within a RAG pipeline, you can leverage metrics such as:

- Faithfulness: Measures the factual consistency of the generated response against the provided context.

- Answer Relevancy: Assesses how pertinent the generated response is to the given prompt.

- Context Recall: Evaluates the extent to which the retrieved context aligns with the annotated response.

- Context Precision: Evaluates whether all ground-truth relevant items present in the retrieved contexts are ranked near the top.

- Context Relevancy: Evaluates how relevant the retrieved context is in addressing the provided query.
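Two of these definitions are easier to grasp as arithmetic. The RAGAS documentation describes Faithfulness as the fraction of claims in the generated answer that can be inferred from the retrieved context. It describes Context Precision@K as the sum of Precision@k over the ranks k that hold a relevant chunk, divided by the number of relevant chunks in the top K. In RAGAS, an LLM extracts the claims and judges relevance. This minimal sketch assumes those verdicts are already available as booleans, so it shows only the final scoring step. The function names are illustrative, not the RAGAS API.

```python
from typing import Optional, Sequence


def faithfulness(claim_supported: Sequence[bool]) -> float:
    """Share of answer claims that the retrieved context supports."""
    if not claim_supported:
        return 0.0
    return sum(claim_supported) / len(claim_supported)


def context_precision_at_k(relevant: Sequence[bool], k: Optional[int] = None) -> float:
    """Rank-aware precision over the top-k retrieved chunks.

    relevant[i] says whether the chunk at rank i + 1 is relevant to the ground truth.
    """
    ranks = list(relevant[:k]) if k is not None else list(relevant)
    total_relevant = sum(ranks)
    if total_relevant == 0:
        return 0.0
    score = 0.0
    hits = 0
    for i, is_relevant in enumerate(ranks, start=1):
        if is_relevant:
            hits += 1
            score += hits / i  # Precision@i, counted only at ranks holding a relevant chunk
    return score / total_relevant


print(faithfulness([True, True, False]))           # 2 of 3 claims supported
print(context_precision_at_k([True, False, True]))  # relevant chunks at ranks 1 and 3
print(context_precision_at_k([False, True, True]))  # same chunks ranked lower
```

Both retrieval lists contain two relevant chunks. The first scores 5/6 and the second scores 7/12, which shows how the metric rewards pipelines that place relevant context first.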

End-to-End Evaluation

To assess the end-to-end performance of a RAG pipeline, you can leverage metrics such as:

- Answer Semantic Similarity: Assesses the semantic resemblance between the generated response and the ground truth.

- Answer Correctness: Gauges the accuracy of the generated response when compared to the ground truth.
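In RAGAS, Answer Semantic Similarity compares embeddings of the generated answer and the ground truth. Its documentation describes Answer Correctness as a weighted combination of that semantic similarity and a factual F1 score over claims, where F1 = TP / (TP + 0.5 × (FP + FN)). The minimal sketch below assumes the embeddings and the claim counts (true positives, false positives, false negatives) have already been produced by a model. It uses toy vectors, and the (0.75, 0.25) weights are an assumption for illustration. The function names are hypothetical, not the RAGAS API.

```python
import math
from typing import Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embedding vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def factual_f1(tp: int, fp: int, fn: int) -> float:
    """F1 over claims: TP / (TP + 0.5 * (FP + FN))."""
    denom = tp + 0.5 * (fp + fn)
    return tp / denom if denom else 0.0


def answer_correctness(tp: int, fp: int, fn: int,
                       answer_emb: Sequence[float], truth_emb: Sequence[float],
                       weights: Tuple[float, float] = (0.75, 0.25)) -> float:
    """Weighted average of factual F1 and semantic similarity (weights are an assumption)."""
    w_fact, w_sem = weights
    semantic = cosine_similarity(answer_emb, truth_emb)
    return (w_fact * factual_f1(tp, fp, fn) + w_sem * semantic) / (w_fact + w_sem)


# Toy example: 2 correct claims, 1 unsupported claim, 1 missed claim, identical embeddings.
print(answer_correctness(2, 1, 1, [1.0, 0.0], [1.0, 0.0]))
```

With these inputs, the factual F1 is 2/3 and the similarity is 1.0, so the weighted score is 0.75 × 2/3 + 0.25 × 1.0 = 0.75.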

You can view the full prompts for these metrics in the Scorecard Scoring Lab or in the RAGAS GitHub repository.

Define Custom Metrics for Your LLM Use Case

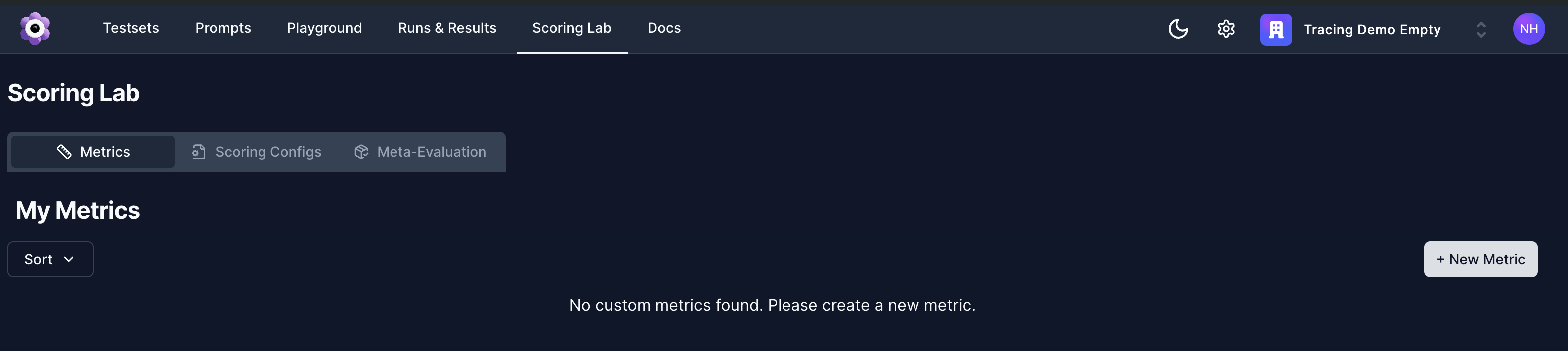

Adding a New Custom Metric in Scoring Lab

Scorecard also offers the flexibility to define custom metrics unique to your LLM application. At the top of the metrics section in the Scoring Lab, click the “+ New Metric” button and fill out the details of your metric. The required information includes:

- Metric Name: a human-readable name of your metric.

- Metric Guidelines: natural language instructions to define how a metric should be computed.

- Evaluation Type: how your metric will be evaluated.
  - AI: an AI model computes the metric, using the Metric Guidelines as its prompt.
  - Human: a human subject-matter expert evaluates the metric manually.
  - Heuristic (SDK): currently supported only via the SDK (check out this cookbook); UI support is coming soon! 🚀

- Output Type: the output type of your metric.
  - Boolean
  - Integer (1-5)
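The fields of a custom metric map naturally onto a small record type. The sketch below is a hypothetical, library-free model of a custom metric definition, not the Scorecard SDK. The class, enum, and field names are invented for illustration. It rejects scores that do not match the declared output type and includes an example heuristic metric, `is_concise`, which is a plain function that returns a Boolean.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Union


class EvaluationType(Enum):
    AI = "ai"
    HUMAN = "human"
    HEURISTIC = "heuristic"


class OutputType(Enum):
    BOOLEAN = "boolean"
    INT_1_TO_5 = "int_1_to_5"


@dataclass(frozen=True)
class CustomMetric:
    name: str                         # human-readable metric name
    guidelines: str                   # natural-language instructions for computing the metric
    evaluation_type: EvaluationType
    output_type: OutputType

    def validate(self, score: Union[bool, int]) -> Union[bool, int]:
        """Reject scores that don't match the declared output type."""
        if self.output_type is OutputType.BOOLEAN:
            if not isinstance(score, bool):
                raise TypeError(f"{self.name} expects a Boolean score")
        # bool is a subclass of int, so exclude it explicitly for 1-5 metrics.
        elif isinstance(score, bool) or not isinstance(score, int) or not 1 <= score <= 5:
            raise ValueError(f"{self.name} expects an integer from 1 to 5")
        return score


def is_concise(response: str, max_words: int = 50) -> bool:
    """Hypothetical heuristic metric: passes if the response has at most max_words words."""
    return len(response.split()) <= max_words


concise = CustomMetric(
    name="Concise",
    guidelines="Pass if the response has at most 50 words.",
    evaluation_type=EvaluationType.HEURISTIC,
    output_type=OutputType.BOOLEAN,
)
print(concise.validate(is_concise("Paris is the capital of France.")))  # True
```

Validating against the declared output type catches mismatches early, for example a 1-5 metric that receives a judge score of 6.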

New Custom Metric

Consistently Evaluate LLM Applications in Multiple Dimensions With Scoring Configs

Once you have defined all the metrics you need to properly assess the quality of your LLM application, you can group them into a Scoring Config. A Scorecard Scoring Config is a collection of metrics used together to score an LLM application. You can run a Scoring Config routinely against your LLM application to get a consistent measure of the performance and quality of its responses. Instead of manually selecting several metrics each time you score an application for a particular use case, you define the set once and score the same way on every run. You can also keep separate Scoring Configs for different purposes, e.g. one for RAG applications and another for translation use cases, so that testing stays consistent within each.
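The following sketch shows the idea of a Scoring Config in code: a named bundle of metrics that scores every test case the same way on every run. It assumes each metric can be expressed as a Python function that maps a test case to a number. The `ScoringConfig` class, the two toy metrics, and the choice to report the mean per metric are illustrative assumptions, not Scorecard's API.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, Dict, List

Case = Dict[str, str]                 # e.g. {"question": ..., "answer": ..., "ideal": ...}
Metric = Callable[[Case], float]


@dataclass
class ScoringConfig:
    name: str
    description: str
    metrics: Dict[str, Metric] = field(default_factory=dict)

    def run(self, cases: List[Case]) -> Dict[str, float]:
        """Score every case with every metric and return the mean score per metric."""
        if not cases:
            raise ValueError("ScoringConfig.run needs at least one test case")
        return {
            metric_name: mean(metric(case) for case in cases)
            for metric_name, metric in self.metrics.items()
        }


def exact_match(case: Case) -> float:
    """1.0 if the answer matches the ideal answer, ignoring case and surrounding spaces."""
    return float(case["answer"].strip().lower() == case["ideal"].strip().lower())


def within_50_words(case: Case) -> float:
    """1.0 if the answer has at most 50 words."""
    return float(len(case["answer"].split()) <= 50)


rag_config = ScoringConfig(
    name="RAG regression",
    description="Checks correctness and brevity on every release.",
    metrics={"exact_match": exact_match, "concise": within_50_words},
)

cases = [
    {"question": "Capital of France?", "answer": "Paris", "ideal": "paris"},
    {"question": "2 + 2?", "answer": "5", "ideal": "4"},
]
print(rag_config.run(cases))  # {'exact_match': 0.5, 'concise': 1.0}
```

Because the metric set lives in one object, rerunning `rag_config.run` after each model iteration yields directly comparable numbers.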

Overview of Scoring Configs in Scoring Lab

You can get an overview of your existing Scoring Configs in the Scoring Lab under “Scoring Configs”. In this tab, you can also define a new Scoring Config by providing the following information:

- Name: a human-readable name of your Scoring Config.

- Description: a short description of your Scoring Config.

Defining a New Scoring Config

After specifying a name and description, click “Select Metrics”. On the next page, select one or more metrics to use for the Scoring Config.

Selecting Metrics for a New Scoring Config
